Arts & Entertainment

Kim Kardashian's Surprising Response to Taylor Swift’s 'TTPD' Feud Song!

Our latest posts

Trends

This Teenager Accepted Into More Than 50 Universities Now Receives $1.3 Million In Scholarships

Arts & Entertainment News

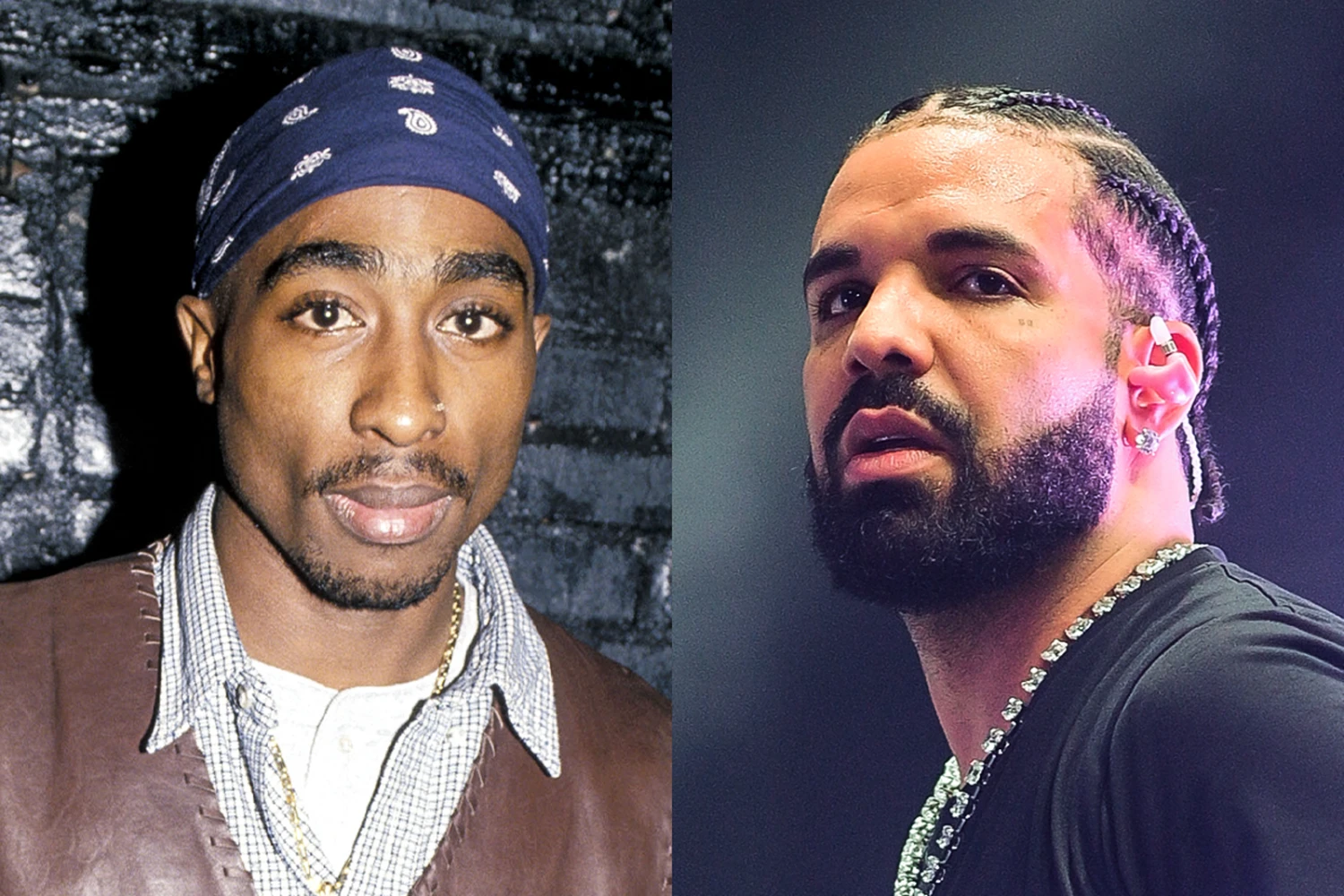

Drake Under Fire: Tupac's Family Threatens Legal Action!

The music industry often stirs its share of controversies, but few involve legal complexities like those surrounding posthumous digital impersonations. …

Business

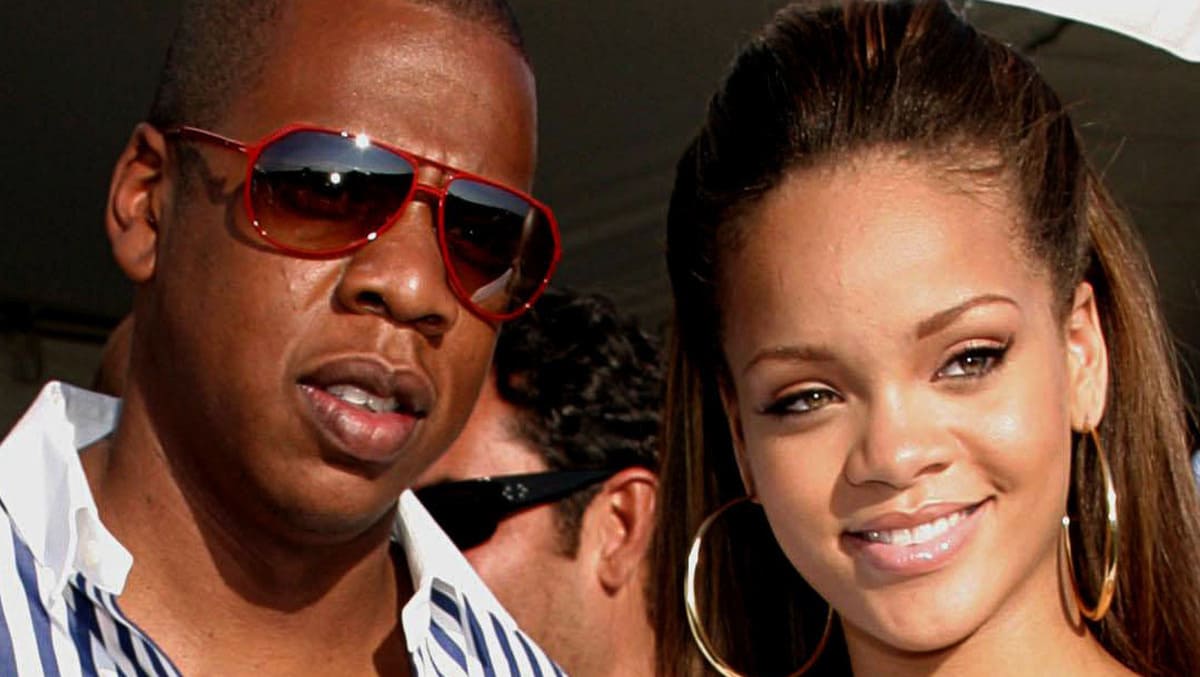

Rihanna And Jay-Z: Forbes Reveals The Amount Of Their Fortune

In the galaxy of stars that twinkle in the financial firmament, two names shine particularly …

Top posts

Arts & Entertainment, Business

Exploring the impressive net worth of singer and actress Ariana Grande

Arts & Entertainment, News

Celine Dion: How Much the Singer Is Worth and How She Makes Her Money

Arts & Entertainment, Business

Inside Mariah Carey’s Sprawling Empire: A Look at Her Net Worth in 2023

Our files

Fashion Icon Simon Jacquemus is a Dad! Meet His Twins, Including One Unusually Named!

A name synonymous with bold minimalism and sun-kissed aesthetics, Simon Jacquemus has revolutionized the fashion …

Vitalik Buterin Faces Setback as $1 Million Gets Stuck in Optimism Bridge!

In a surprising turn of events, Vitalik Buterin, the co-founder of Ethereum, has experienced a …

BlackRock’s Spot Bitcoin ETF Joins Elite Growth Circle

In an industry where volatility is the norm, BlackRock’s spot Bitcoin ETF has emerged as …

End of a Love Story: Why Golden Bachelor’s Gerry Turner & Theresa Nist Are Divorcing!

The Legendary Romance of Gerry Turner and Theresa Nist. Their love story started as a …

Uncovering the Mystery of Satoshi Nakamoto, Bitcoin’s Enigmatic Creator

In the world of cryptocurrencies, there is one name that stands above all others: Satoshi …

A Personal Reflection on the Impact of the September 11 Attacks

Memories from a New Yorker’s Perspective. As a lifelong resident of New York City, the …

Discovering the Secrets of England’s Most Famous Family in “The Royal House of Windsor”

A Century of The Windsors: Glamour and Scandal. To commemorate the 100th year of the …